
Tesla FSD Beta crashes into cycle lane (screenshot via YouTube/AI Addict)

Tesla FSD Beta crashes into cycle lane (screenshot via YouTube/AI Addict)Tesla using Full Self-Driving Beta crashes into cycle lane bollard...weeks after Elon Musk's zero collisions claim

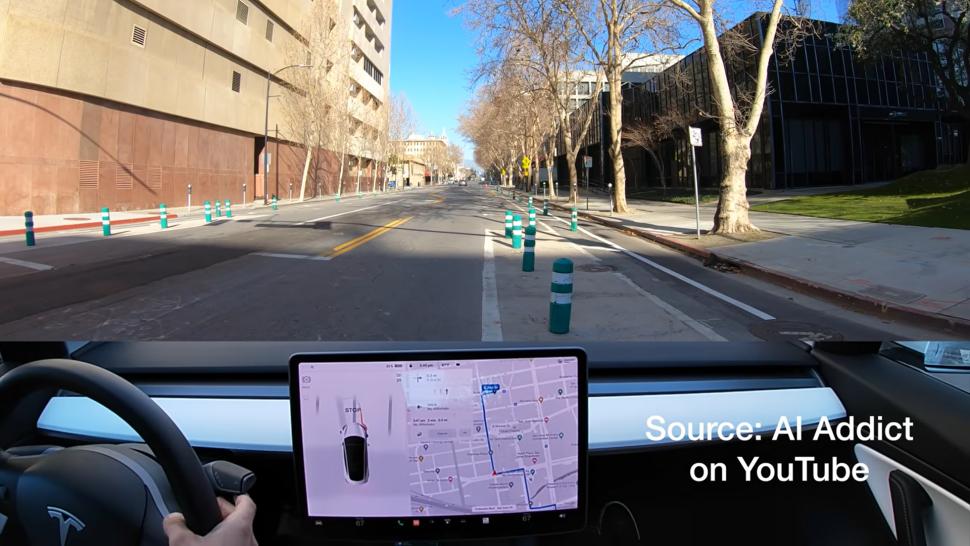

A Tesla using the Full Self-Driving (FSD) Beta has been caught on camera crashing into a cycle lane bollard, just weeks after Elon Musk claimed the programme had not been responsible for a single collision since its release in October 2020.

AI Addict, a San Jose-based YouTuber who regularly shares videos of FSD 'stress tests' showing the technology being used in real-world settings, uploaded the footage of his Tesla crashing into a segregated cycle lane bollard while making a right turn.

Earlier in the video the vehicle can be seen running a red light, before later trying to make a turn into a tram lane.

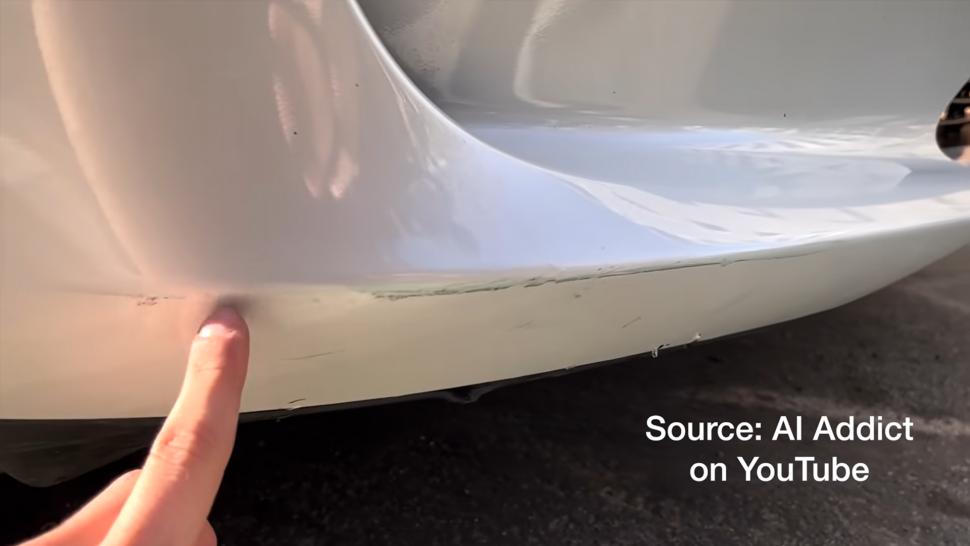

Commentating over the footage, the Tesla owner can be heard saying: "Changing lanes...Oh...S***. We hit it. We actually hit that. Wow. We were so close on the corner...I can't believe the car didn't stop."

In the next clip the damage to the front end of the Tesla, including a few scratches and scuff marks, is shown to the camera.

"Alright, YouTube, it's confirmed I have hit that pylon. It's a first for me to actually hit an object in FSD," AI Addict explained.

Tesla says FSD is "capable of delivering intelligent performance and control to enable a new level of safety and autonomy".

It allows the vehicle to drive autonomously to a destination entered into the navigation system, and while the driver must remain ready to take control, it is as close to fully autonomous technology as the company has so far delivered.

The technology has been hotly debated, with co-founder and CEO Musk as recently as last month claiming it had not caused a single crash since its launch in October 2020.

Replying on Twitter to Tesla shareholder Ross Gerber, who asked whether that claim was accurate, Musk simply answered "Correct".

Correct

— Elon Musk (@elonmusk) January 16, 2022

FSD is currently available to several thousand customers who have been deemed "safer drivers", selected by the company through its "safety test score".

The programme has been criticised by those who say it gives users autonomous features but, because it is still only a beta in testing, passes responsibility to the driver.

Previous versions of Tesla's Autopilot technology have been questioned too. In 2018, a Tesla vehicle on Autopilot crashed into a police car in California. The woman 'driving' the car sustained minor injuries.

That incident came not long after a robotics expert from Stanford University said the semi-autonomous driving technology displayed a frightening inability to recognise cyclists.

One of the company's more recent controversies came in December when it was announced owners could now play video games while driving, following the latest update of the infotainment touchscreen.

The update was widely criticised by safety bodies in the US, who have already raised major concerns about the vehicles' Autopilot mode.

Dan is the road.cc news editor and joined in 2020 having previously written about nearly every other sport under the sun for the Express, and the weird and wonderful world of non-league football for The Non-League Paper. Dan has been at road.cc for four years and mainly writes news and tech articles as well as the occasional feature. He has hopefully kept you entertained on the live blog too.

Never fast enough to take things on the bike too seriously, when he's not working you'll find him exploring the south of England on two wheels at a leisurely weekend pace, or enjoying his favourite Scottish roads when visiting family. Sometimes he'll even load up the bags and ride up the whole way; he's a bit strange like that.


28 comments

I'd much rather be cycling next to an AI-driven car than a human motorist... the more AI on the roads, the fewer deaths there will be.

I also think AI-driven cars have the capability to ease congestion by not doing dipshit things like sitting on keep clear areas and blocking exits.

Certain sections of the media will entirely lose their shit with the first few AI accidents, so it will be a long haul to get people over the line. But once we reach the tipping point it will take over very quickly. My guess is 5-8 years.

However, as a motorist, I'm not really interested in AI until it's fully capable. I don't fancy that my reactions would be quick enough to take over should an incident occur while my tiny mind is fully engaged on other thoughts.

Self-driving cars are a combination of a techno-utopian fantasy and a massive scam.

Full self-driving requires a computer to be able to instantaneously understand the road environment around it, and to have sufficient theory of mind to model what human road users, both in and out of other cars, are going to do. That's strong artificial intelligence, that is, a computer that literally thinks and understands the world like a human.

We're currently so far from that the whole idea of self-driving cars would be a big joke if the car industry, with the prize pillock Elon Musk in the vanguard, hadn't conned regulators into already letting these things loose on the roads.

This comment on BoingBoing's story about Tesla being ordered to disable its ~~aggressive~~ assertive self-driving mode is insightful and deeply scary. Some highlights:

"Self-driving consists of a couple of dozen of what we call Hard Problems in computer science. These are problems for which the solution can’t really be calculated, only approximated. The whole system is probabilities. It’s how all this “machine learning” stuff you hear about now works. Probabilities. Siri cannot identify the phrase “what time is it”. What it can do is take a long string of noise and map that to a series of likelihoods of that noise being “what time is it” or “play Weezer” or “call mom”. That’s all “machine learning” is. It is so much more primitive than the lay public thinks it is.

"None of this is ever EVER guaranteed. The car does not know there is a stop sign there. It knows there is an 82% chance that this frame of video contains a stop sign and a 71% chance that it is in our lane and an 89% chance it is close enough that we should consider stopping for it.

"Because the algorithms are never sure about anything (and to be clear, never will be) you need “fudge factors”. How sure is sure enough that it’s a stop sign and we should stop? If you set it to 100%, the car will run every single stop sign. If you set it to 85%, the car will stop randomly in traffic at red birds and polygonal logos on signs. There’s no answer that works in every situation so they are beta testing these sets of fudge factors to see which works best. … This technology is not safe.

"All of this should, of course, terrify you. These cars should not be on the road, and no ethical AI engineer would say otherwise. You know all those times Siri misunderstood you? With a self-driving car, someone just died."
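The "fudge factor" in the quoted comment is a confidence threshold, and the trade-off it describes can be shown in a few lines. The Python below is a minimal sketch under invented assumptions: the five detections and their scores are made up, and `Detection`, `should_stop` and `count_errors` are hypothetical names with no connection to Tesla's actual software. Each detection carries its true label only so that errors can be counted.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    true_label: str        # what the object really is (known here only for scoring)
    stop_sign_prob: float  # classifier's confidence that the object is a stop sign


def should_stop(d: Detection, threshold: float) -> bool:
    # Stop only if the confidence reaches the chosen threshold ("fudge factor").
    return d.stop_sign_prob >= threshold


def count_errors(detections: list[Detection], threshold: float) -> tuple[int, int]:
    # Missed stop signs: real signs the car would drive through.
    missed = sum(
        1 for d in detections
        if d.true_label == "stop_sign" and not should_stop(d, threshold)
    )
    # False stops: non-signs the car would brake for.
    false_stops = sum(
        1 for d in detections
        if d.true_label != "stop_sign" and should_stop(d, threshold)
    )
    return missed, false_stops


# Invented frames for illustration only; not real detector output.
FRAMES = [
    Detection("stop_sign", 0.82),
    Detection("stop_sign", 0.97),
    Detection("stop_sign", 0.91),
    Detection("red_bird", 0.86),
    Detection("logo", 0.88),
]

for threshold in (1.0, 0.9, 0.85):
    missed, false_stops = count_errors(FRAMES, threshold)
    print(f"threshold {threshold:.0%}: {missed} missed stop signs, {false_stops} false stops")
```

With these made-up scores, a 100% threshold misses all three stop signs, 90% misses one, and 85% catches two of the three but also stops for the bird and the logo. No single threshold gets every frame right, which is the comment's point.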

Though arguably there are plenty of human drivers already on the roads who can't do that with any great degree of success, so...

Tesla publish their collision stats every quarter. I believe this one is a summary from Q3 2020.

"In the 3rd quarter, we registered one accident for every 4.59 million miles driven in which drivers had Autopilot engaged. For those driving without Autopilot but with our active safety features, we registered one accident for every 2.42 million miles driven. For those driving without Autopilot and without our active safety features, we registered one accident for every 1.79 million miles driven. By comparison, NHTSA’s most recent data shows that in the United States there is an automobile crash every 479,000 miles.”
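Turning the quoted "one accident every N miles" figures into crashes per million miles, and comparing each with the NHTSA number, is simple arithmetic. A minimal Python sketch, using only the figures in the quote; the dictionary keys and the `crashes_per_million_miles` helper are illustrative names, and the raw ratios take no account of where Autopilot is used, the age of the vehicles, or whether Tesla and NHTSA count a "crash" the same way.

```python
# Miles driven per recorded crash, as quoted (Tesla Q3 2020 report; NHTSA US figure).
MILES_PER_CRASH = {
    "Autopilot engaged": 4_590_000,
    "No Autopilot, active safety features on": 2_420_000,
    "No Autopilot, no active safety features": 1_790_000,
    "NHTSA US average": 479_000,
}


def crashes_per_million_miles(miles_per_crash: float) -> float:
    # One crash every N miles is 1,000,000 / N crashes per million miles.
    return 1_000_000 / miles_per_crash


baseline = MILES_PER_CRASH["NHTSA US average"]
for group, miles in MILES_PER_CRASH.items():
    rate = crashes_per_million_miles(miles)
    # Raw ratio only: ignores road type, vehicle age, driver mix and crash definitions.
    ratio = miles / baseline
    print(f"{group}: {rate:.2f} crashes per million miles ({ratio:.1f}x NHTSA miles per crash)")
```

On these figures alone, the Autopilot-engaged group works out at about 0.22 crashes per million miles, roughly 9.6 times as many miles between crashes as the NHTSA figure; the arithmetic cannot say whether that gap survives a like-for-like comparison.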

As we all know, pulling the first factoid that supports your argument out of the internet's arse is not exactly good data review, and there are any number of confounding factors and caveats in any set of data. We also have to assume that the data that Tesla has presented has gone through some sort of sanity check and peer review and is at least broadly factual. However, from the top-line figures it would appear demonstrable that AI-controlled cars are already considerably better at the task than human drivers and, given the ability to rapidly share "knowledge" from every real-life incident, will almost certainly become even better over time.

'Autopilot' isn't the same thing as self-driving, though - it's a form of driver-assist, no? So would occasions where the car wanted to do something stupid but was overruled by the (human) driver even show up in those figures, given that no accident resulted?

I recall @Rich_cb and I discussing these stats some time ago and there's definitely some cherry-picking going on though I forget the details - something to do with NHTSA's data covering a much wider range of vehicles and situations which made the comparison void.

I think the issues are raised here: https://www.businessinsider.com/tesla-crash-elon-musk-autopilot-safety-data-flaws-experts-nhtsa-2021-4?r=US&IR=T

I do appreciate your points, but I'm still going to go with the optimism that safe driving is not an unsolvable problem. I think the failings of Tesla are more to do with just using machine vision (via ordinary cameras) rather than building up a true 3-d picture of their surroundings with lidar/sonar/radar etc. Ultimately, it is possible to make a bat's brain (with the help of a mummy bat and daddy bat that love each other very much) that is capable of using sonar to navigate in the dark and that brain is tiny. Now obviously, we're nowhere close to achieving the same kind of performance in silicon, but I don't see why it can never be done.

However, Musk is a showman and I don't believe what he says.

I'm slightly wary of self-driving AI - primarily for other reasons more to do with how humans use this e.g. who benefits, who regulates and what happens when it goes wrong etc. However your quoted comment from an article somewhere seems to be conflating philosophy with engineering / practicalities. It may startle that commenter to know that the human brain itself is likely doing exactly what they critique computers for doing a bad job at, e.g. tackling "... problems for which the solution can’t really be calculated, only approximated. The whole system is probabilities..."

There's lots of debate on the larger question (e.g. does the "hard problem" even exist or does it arise e.g. due to human framing of our own "beliefs" about what we think we do when we think). However the more we know the clearer it is that for many human activities we operate a lot of more or less flaky heuristics to achieve our complex behaviours. True, we almost certainly do the low-level computation differently than computers compute (numbers are likely not a feature) but a "fuzzy computation" it certainly is. This is readily apparent when things go wrong.

The point is not "but robots!" As we're aware on this forum humans have an equivocal record for safely travelling on the roads. Machines are already better at many specific tasks than humans including some complex ones. They also tend to avoid some of the common human failings (forgetting, being distracted, emotional or tired). It's more "how can we be sure this handles enough of the vagaries of the 'open world' environment we're letting them free in?"

"Brute simplicity" was certainly a valid critique of early generations of AI. But that study had to start with a very reductionist view of something so complicated just to get a handle on the questions to ask.

While humans certainly have a keen interest in what others think / believe and have complex systems dedicated to that*, I'm not sure that's proof you need any kind of detailed model of a human brain in the system. I'm not convinced that human drivers are doing something irreducibly complex to do with understanding of others when driving. If you ask them - when they have time to think - what they thought their decision-making process was they may explain it as that. I suspect this just shows the limits of our introspection into our own processes.

We likely rely on "prediction" - or rather our experience, which could be said to be our own "machine learning" - to a greater extent than computers do simply because our reaction and processing times are much longer. Certainly engaging higher-order conscious reasoning would deliver results too late to help in many cases.

* The details are fascinating - very young children seem to have some mental machinery primed to start teaching itself about others. Some of this may be shared or have evolved independently in other animals too.

You know all those times a human misunderstood you?

With a human driven car someone just died.

Self driving cars don't have to be perfect, they just have to be safer than humans.

Every mile they drive they get better and will continue to do so. I would argue it's unethical to not develop the technology given the potential harm reduction it offers.

Fully self driving taxi services are now running in two US cities so I'm not sure they're fantasy any more either.

Musk is a P. T. Barnum character. I wouldn't really trust his opinion on the actual state of readiness of Tesla's self driving algorithms. However, the Tesla AI will actually learn the lessons and not just for that one vehicle but for all Teslas that receive updated code based on real life incidents.

To Be Fair, the comments about the ability to recognise cyclists was in a different age as far as this technology is concerned.

On the other hand, statistics about the lack of blame in collisions are fraught with errors, in that no car has been driven continuously on FSD: the car picks the easy bits and leaves the driver to override it whenever things get tricky.

It is amazing technology but still has a long way to go. Elon Musk repeated the saying that when you are 90% of the way to solving something like autonomous driving you still have 90% of the work to do. I think the true figure might be more like 99% when you are dealing with life and death.

Watched it. There was no staring-eyed rage at anything in its way, so I'm still going for AI in preference to BMW.

It's still safer for cyclists than the standard UK BMW or Audi driver!

Beta Software in crash shock.

*tesla* So basically - you can have this functionality if you help test it.

*tester* - Let's treat it as production code. OMG it crashed.

All it shows is the tester is a twat.

Musk is a twat too btw....

Well, no - it also shows that allowing things to be public beta tested on public roads isn't a great idea.

Everybody has a testing environment. Some people are lucky enough to have a separate environment to run production in.

Isn't this always the problem of "what do you get if you cross {thing} with a computer? A computer."? e.g. if everything is effectively software then we'll get the practices of that business. Although I'm sure no company has ever made even its lowest-priority users act as beta (or even alpha) testers ...

Remembering the lead-in-petrol story you highlighted perhaps it's not unique to software. I think this does change things though because now technological change occurs even closer to the speed of fashion and a "fix as we go" mindset is the norm. I'm recalling as a counterpoint to that the Brooklyn Bridge where the chief engineer said that due to uncertainties - e.g. what they didn't know they didn't know - he designed some parts with a safety factor of several times the required strength they calculated. Which turned out fortunate as they were supplied some sub-spec materials and no-one had much idea of the aerodynamics of such structures at that time. Obviously Teslas are not designed to last that long - indeed will have obsolescence built-in!

Before the computer age, iterating designs/production/manufacturing was an expensive and laborious task, so more attention was given up-front (e.g. measure twice, cut once). However, with software, it can be very cheap to throw together a prototype, muck around with it, bin it and then start again. This fits in with the "move fast and break things" ideology as most companies can easily manage having a bit of down-time and will then hopefully prevent that same mistake happening again.

None of that fits in very well with safety-critical engineering which is what Tesla should be doing as there's a world of difference between a website being down and a pedestrian/cyclist being knocked down.

Well, we've always prototyped - and still do extensively in some domains (firearms come to mind). However, I think the existing pressures to spend less time at that stage are increasing. Also, having more than a hazy overview becomes harder as the software increases in complexity and the degree of connection between these systems increases. Yes, the death of the expert generalist was supposedly almost 200 years ago...

Completely off-topic now but I find this domain interesting. If you're not already aware Tim Hunkin has some interesting musings about the process of "design" and technology - his and others - as well as some amusing machines. A place to browse around.

Elon Musk was not a co-founder of Tesla. Tesla was founded in July 2003 by Martin Eberhard and Marc Tarpenning. He just pushed them out and now claims to be a co-founder, just like he does with PayPal.

I didn't know that!

tbf to Musk on this. He bought them out 8 months after the company was founded (July 2003 to February 2004) and before a product had been released. That's close enough for me. They needed piles of money. He had it.

They still have shareholdings and are likely crying all the way to the bank.

Elon Musk and JB Straubel had been working independently on putting an electric powertrain derived from AC Propulsion IP into a Lotus Elise before they met Eberhard et al.

It was more of a stealth mode merger than Elon being the first investor in a going concern.

Eberhard fucked up the development of the Roadster; Elon Musk replaced him as CEO, found more funding to keep the company going, and the rest, as they say, is history.

The "secret master plan mk 1" is also mostly Elon Musk's work. The strategy of sports car, luxury car, junior executive car, then everything else, plus the relentless execution, is what differentiated Tesla, and that is mostly due to Elon Musk's iron will setting the culture.

Awaiting comment from World Bollard Association™

let the hostilities commence!!!

I know what side I'm on

Should have gone with LIDAR

Surely MIFWARF? Man In Front Waving A Red Flag.