
OPINION

Do 92% of cycling fatalities happen in low light conditions? We investigate an extraordinary claim

Image: Researchers (CC BY-NC-ND 2.0 Sandra Carbajal|Flickr)

What do you do when a claim about cycling makes no sense? You find out where it's coming from and take it apart.

Recently, Gadgette, Huffington Post and the Guardian quoted a startling statistic. Lumo, a maker of cycling gear with built-in lights for visibility, claimed that 92% of cycling fatalities happen in low light conditions.

If you take ‘low light’ to mean the hours from evening twilight to morning twilight, that number instantly sets alarm bells ringing for anyone familiar with cycling and cycling fatality data. Most crashes, and therefore most fatalities, happen during the hours of daylight, because that’s when people are cycling. The Department for Transport's 2013 report on cycling road casualties doesn’t even mention light conditions as a factor. If nine out of ten cycling fatalities really happened at night, it's hard to believe that would have got past the DfT.

I asked Lumo where the figure had come from. They told me: “the source was a study by Owens & Sivak (1996) in Human Factors 38(4) pp680-689”.

That paper turns out to be behind a paywall, as so many research papers are. A prominent cycling researcher came to my rescue with a method of accessing the paper, which you can read for yourself here.

‘Differentiation of Visibility and Alcohol as Contributors to Twilight Road Fatalities’ looked at the factors involved in fatal collisions in the USA, between 1980 and 1990, during the twilight periods of the day.

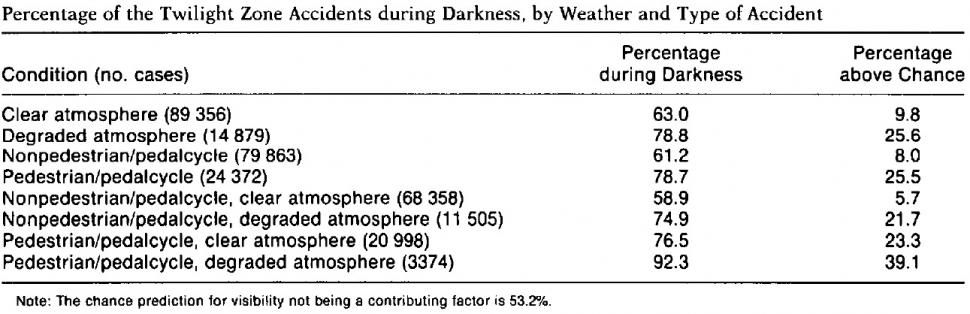

Lumo’s 92% figure appeared to come from this table:

However, Owens & Sivak weren’t looking at all fatal accidents, just those during twilight, and they were looking for the effect of the darker portion of the twilight period and of “degraded atmospheric conditions”, in other words poor visibility. The 92% figure is therefore a subset of a subset of a subset of a subset. It's the proportion of (a) fatal collisions that (b) had pedestrians or cyclists as victims, (c) happened during twilight hours and (d) took place in degraded visibility, that (e) occurred in the dark half of the twilight period. It also groups pedestrians and cyclists together in a way that’s useful for their analysis but further reduces the study’s usefulness for teasing out factors in cycling fatalities.
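One way to see why those nested conditions matter is to write the figure as a conditional proportion. The letters here are shorthand for this sketch, not Owens & Sivak's notation: F is a fatal collision, V a pedestrian or cyclist victim, C a cyclist victim, T a collision during twilight, D degraded visibility, K the darker half of twilight, and L low light in the dusk-to-dawn sense. Reading the table as described above:

```latex
\begin{aligned}
\text{Table figure:}\quad & P(K \mid F, V, T, D) \approx 0.92 \\
\text{Lumo's claim:}\quad & P(L \mid F, C) \approx 0.92 \\
\text{Back to all such collisions:}\quad & P(K \mid F, V, D) = P(K \mid F, V, T, D)\,P(T \mid F, V, D)
\end{aligned}
```

Because the darker half of twilight lies inside twilight, the last line shows that the 92% can only become a share of all such collisions after being multiplied by the fraction of them that happen during twilight at all, so it can never be larger than that fraction.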

In addition, as Owens and Sivak point out in their introduction, the rate of fatal road crashes per distance travelled is greater during darkness, but there are fewer people on the road. They write: “In 1993, for example, the nighttime rate was 3.9 fatalities per 100 million vehicle miles, as compared with 1.2 for daytime. If the nighttime rate were lowered to match the daytime rate, the number of road fatalities in 1993 would have been 35.9% lower, saving 15,092 lives.”
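To see why a higher rate per mile at night doesn't mean most deaths happen at night, here is a minimal Python sketch. It uses the two 1993 rates from the quote, which cover all US road fatalities per vehicle mile rather than cyclists specifically, and treats the share of miles travelled at night as a hypothetical input, because that exposure figure isn't given here. The function names are illustrative only.

```python
# Rates quoted by Owens & Sivak for all US road fatalities in 1993,
# in fatalities per 100 million vehicle miles (not cycling-specific).
NIGHT_RATE = 3.9
DAY_RATE = 1.2


def night_share_of_fatalities(night_mile_fraction: float) -> float:
    """Fraction of fatalities occurring at night, given the (hypothetical)
    fraction of vehicle miles travelled at night."""
    if not 0.0 <= night_mile_fraction <= 1.0:
        raise ValueError("night_mile_fraction must be between 0 and 1")
    night = NIGHT_RATE * night_mile_fraction        # deaths scale with miles
    day = DAY_RATE * (1.0 - night_mile_fraction)
    return night / (night + day)


def night_fraction_needed(target_share: float) -> float:
    """Invert the formula above: the share of miles that would have to be
    travelled at night for night deaths to make up target_share of all deaths."""
    # Solve NIGHT_RATE*m / (NIGHT_RATE*m + DAY_RATE*(1 - m)) = s for m.
    s = target_share
    return s * DAY_RATE / (NIGHT_RATE * (1 - s) + s * DAY_RATE)


def night_reduction_if_rates_matched() -> float:
    """Fractional drop in night-time deaths if the night rate fell to the
    day rate, with night-time mileage unchanged."""
    return 1 - DAY_RATE / NIGHT_RATE


print(round(night_share_of_fatalities(0.25), 2))   # 0.52
print(round(night_fraction_needed(0.92), 2))       # 0.78
print(round(night_reduction_if_rates_matched(), 3))  # 0.692
```

With these rates, a quarter of miles travelled at night would account for only about half of all deaths, and night travel would need to make up nearly four-fifths of all miles before night-time crashes reached 92% of fatalities. The last function gives the roughly 69% drop in night-time deaths implied by matching the two rates; the quote's 35.9% is presumably that same drop expressed as a share of all 1993 road fatalities.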

I was mystified that anyone could have read this paper and concluded that 92% of all fatal cycling crashes happen in low light conditions. It turns out Lumo hadn’t arrived at that conclusion on their own.

When I checked back with Lumo to make sure that was the figure they had in mind, they told me they’d not actually seen the Owens & Sivak paper, but had relied on a 2010 Australian paper that cited it.

That paper is ‘Cyclist visibility at night: Perceptions of visibility do not necessarily match reality’, Wood et al (2010) Australian College of Road Safety pp56-60, which says:

> The role of visibility in contributing to fatal accidents was examined further by Owens and Sivak, who found that 78.8% of all fatal collisions involving vulnerable road users (cyclists or pedestrians) occurred during low-light conditions. When visibility was degraded further by poor atmospheric conditions, such as rain or fog, 92.3% of all fatal accidents involving a vulnerable road user occurred in low-light conditions.

In fact, as you can see from reading their paper, Owens and Sivak found no such thing, as they were only examining fatal collisions during twilight.

You can’t really blame Lumo for jumping on a number that gave them such a brilliant piece of marketing ammunition, and the paywalled nature of scientific research makes it hard to dig deeper, even if you want to. As Lumo’s Doug Bairner told me in an email: “it’s tough as a small start up to get your hands on papers as a non-academic so we have had to do our research based on whatever we could get our hands on”.

This episode demonstrates a couple of important things. One is that you should examine any research claim sceptically, even if it supports your point or objective. Especially then, in fact. Another is that you should always read the original paper. It may well not say what someone else says it does, even when that person is also a scientist.

More broadly, the paywalls around scientific research make it hard for lay people to read papers relevant to their interests. And by ‘interest’ I don’t mean idle curiosity, though there’s nothing wrong with that. Campaigners, journalists and members of the public affected by research-based decisions need to be able to scrutinise research to draw informed conclusions, and locking it away makes that harder.

Thanks to Bez, Ian Walker and Will Nickell for feedback and help with this article. Any remaining errors or inadequacies of explanation are entirely mine.

John has been writing about bikes and cycling for over 30 years since discovering that people were mug enough to pay him for it rather than expecting him to do an honest day's work.

He was heavily involved in the mountain bike boom of the late 1980s as a racer, team manager and race promoter, and that led to writing for Mountain Biking UK magazine shortly after its inception. He got the gig by phoning up the editor and telling him the magazine was rubbish and he could do better. Rather than telling him to get lost, MBUK editor Tym Manley called John’s bluff and the rest is history.

Since then he has worked on MTB Pro magazine and was editor of Maximum Mountain Bike and Australian Mountain Bike magazines, before switching to the web in 2000 to work for CyclingNews.com. Along with road.cc founder Tony Farrelly, John was on the launch team for BikeRadar.com and subsequently became editor in chief of Future Publishing’s group of cycling magazines and websites, including Cycling Plus, MBUK, What Mountain Bike and Procycling.

John has also written for Cyclist magazine, edited the BikeMagic website and was founding editor of TotalWomensCycling.com before handing over to someone far more representative of the site's main audience.

He joined road.cc in 2013. He lives in Cambridge where the lack of hills is more than made up for by the headwinds.
